12 Feb 2019

Demystifying AI for expert networks

I didn’t notice anything strange about the email. Written in a colloquial tone, it even mentioned Inex One and my job title at the company. The sender, Robin, was a salesperson from Qualifier.ai, promising me smart tools for reaching new customers. Charmed by the personal, friendly tone and curious about their technology, I agreed to a meeting, conveniently scheduled through Robin’s automated calendar.

It wasn’t until a few days later, when I greeted Robin for our meeting, that it dawned on me: he had used his own software to get to this meeting. He probably did little more than show up, since his bots handled lead generation, emailing and scheduling. He “got me at hello” and left with a promise of a paid trial. Genius.

Genius. Or is it?

Our exchange deepened my understanding of the expert networks that apply AI or machine learning to expert sourcing (if you want a short overview of different business models for expert networks, see this article).

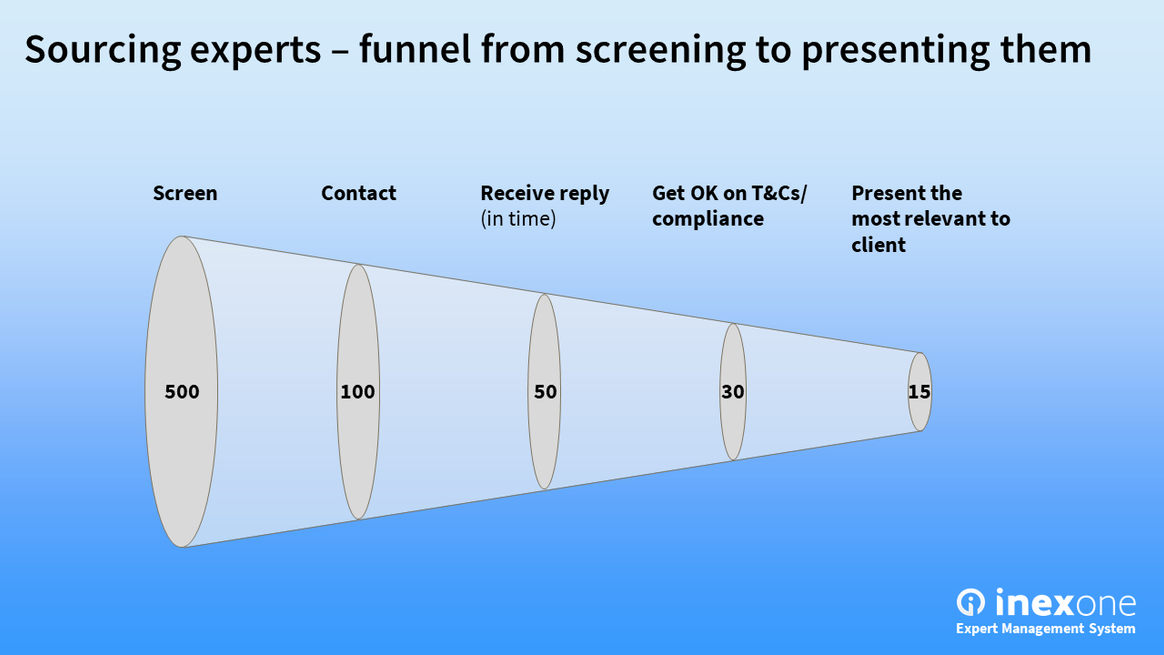

Over the past few years, we’ve seen a handful of these “machine-driven” expert networks emerge, claiming high growth and efficient operations. In the Expert Network Directory, we list all that we come across. As of Feb 2019, there are five: Techspert, NewtonX, Chime Advisors, ProSapient and Monocl. Together they have raised more than $7 million in VC funding, so they should be on to something. To understand what this something is, let’s revisit the funnel originally shown back in November.

Expert networks sift through large numbers of expert profiles, trying to get as many as possible through the funnel and on the phone with their client. A human might screen a few hundred profiles for a request before she gets tired or distracted. Now let’s say this human lives in the 1990s, when a state-of-the-art CRM was a Rolodex.

Suppose you collect 10,000 business cards by meeting four interesting people each day for seven years. Your Rolodex would be loaded, but from a population of 6 billion potential experts, it’s still a very small (and rather biased) sample.
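To put the Rolodex in numbers, here is the arithmetic behind the paragraph above as a short Python snippet. It uses only the figures from the text (four cards a day, 365 days a year, seven years, 6 billion potential experts); the variable names are ours.

```python
# Back-of-the-envelope Rolodex maths, using the figures from the text.
cards_per_day = 4
days_per_year = 365
years = 7
population = 6_000_000_000  # "6 billion potential experts"

rolodex_size = cards_per_day * days_per_year * years
share = rolodex_size / population

print(f"Rolodex size: {rolodex_size:,} cards")                # Rolodex size: 10,220 cards
print(f"Share of potential experts covered: {share:.6%}")    # 0.000170%
```

Four cards a day for seven years gives 10,220 cards, roughly one card for every 587,000 potential experts.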

Fast-forward to 2007, when GLG raised $200 million to monetize their computerized Rolodex. Their associates could whiz through an internal database that contained more than 175,000 expert profiles. At the time, GLG recruited experts through referrals and at industry fairs, as told by a senior expert in this interview.

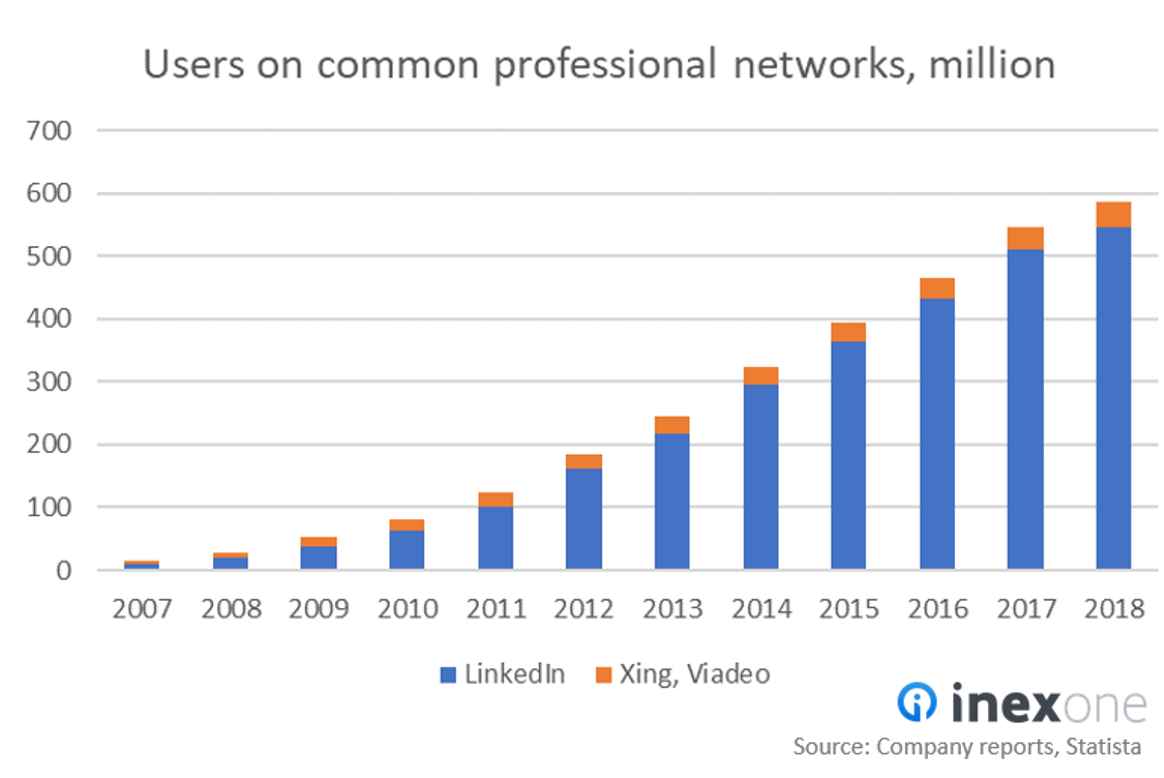

2007 happens to be the year that LinkedIn turned profitable. Over the following decade, LinkedIn’s growth allowed firms like AlphaSights, Third Bridge, Atheneum, Dialectica and Guidepoint to outcompete GLG by focusing strictly on custom recruiting over the Internet.

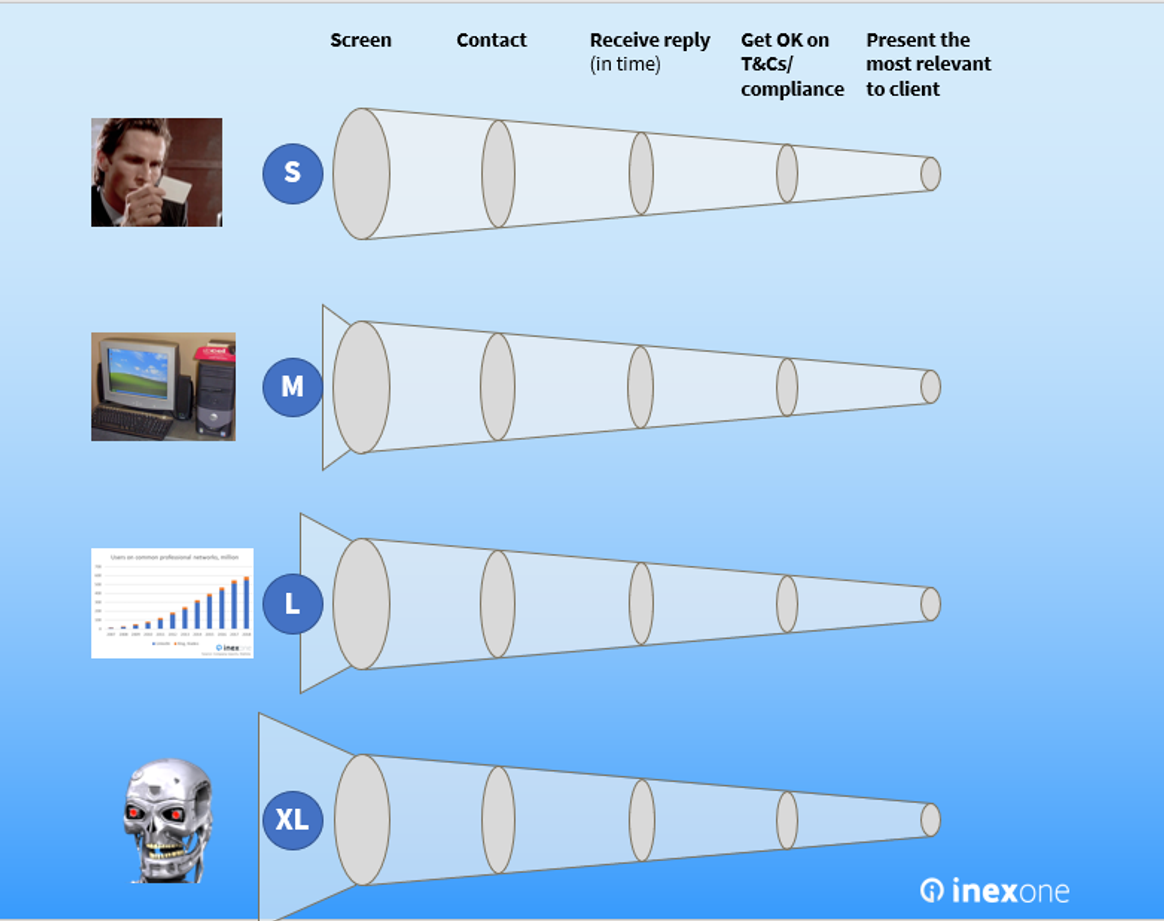

The funnel presented above stays the same across all these years, since there is always a need to double-check relevance, availability, compliance, and the expert’s interest in doing a call with the client. What changes is what goes into the funnel:

The Available Universe of Experts

In the past millennium, the available universe was limited (S). It grew significantly when digitized (M) and even more when experts were sourced on the open web (L). Logging on to LinkedIn, Xing, etc., the analyst meets a random sample of potential experts, and then runs queries to generate her gross list of the most relevant ones.

Now, what is interesting about the machine-driven expert networks is the potential to run queries in multiple databases in parallel (XL). Instead of having an analyst search each database manually, an “AI” could theoretically compile a gross list of the most relevant experts, according to certain criteria. The analyst would then apply her human intelligence to this list, selecting which experts to proceed with. If this machine-generated gross list is any better than random, the expected value and relevance of the presented experts are higher. It’s beautiful in theory.
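A minimal sketch of the idea in Python, assuming two hypothetical in-memory "databases" (`SOURCES`) and a deliberately naive keyword score. It is not how any of the networks named above actually work: each source is queried in parallel with `concurrent.futures.ThreadPoolExecutor`, duplicates are merged by lower-cased name, and the result is a ranked gross list for the analyst to review.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for separate profile databases.
SOURCES = {
    "source_a": [
        {"name": "Ana Silva", "headline": "Sales director, industrial vacuum cleaners, India"},
        {"name": "Lars Berg", "headline": "Wi-Fi router engineer"},
    ],
    "source_b": [
        {"name": "ana silva", "headline": "Former sales director at a vacuum cleaner maker"},
        {"name": "Priya Rao", "headline": "Procurement manager, vacuum cleaner parts, India"},
    ],
}


def query_source(records, keywords):
    """Return (name, score) pairs; the score counts keyword hits in the headline."""
    hits = []
    for record in records:
        text = record["headline"].lower()
        score = sum(1 for kw in keywords if kw in text)
        if score:
            hits.append((record["name"], score))
    return hits


def gross_list(keywords, top_n=10):
    """Query all sources in parallel, merge duplicates by name, rank by best score."""
    keywords = [kw.lower() for kw in keywords]
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda records: query_source(records, keywords), SOURCES.values()))
    best = {}
    for hits in results:
        for name, score in hits:
            key = name.strip().lower()  # naive duplicate resolution
            best[key] = max(score, best.get(key, 0))
    return sorted(best.items(), key=lambda item: (-item[1], item[0]))[:top_n]


print(gross_list(["vacuum cleaner", "India", "sales"]))
# [('ana silva', 3), ('priya rao', 2)]
```

In practice each source would be a remote API or index (which is where threads pay off), and relevance scoring would be far richer than counting keyword hits.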

A side note

When I co-founded the expert network Previro, we planned to be machine-driven. We never were, however, partly due to new personal data legislation (see our GDPR series) and the unclear lawfulness of scraping proprietary databases. The other reason, I must admit, was that I hadn’t yet teamed up with my 1337 colleagues Mehdi and Filip.

The beautiful machine

Let’s now go one step further and answer a few questions:

Are machine-driven expert networks better than regular ones?

If yes, then what is the source of this advantage?

By the way, what are AI and machine learning, really?

We will approach these questions one by one, starting from the bottom:

Question 1: What are AI and machine learning?

This segment has been fact-checked by AI expert Björn Hovstadius, co-Director at RISE Artificial Intelligence, board member of the Big Data Value Association, co-founder of SaaS platform Pagero, and an investor in Inex One.

These two terms are often used interchangeably, although they are quite different. Machine learning is a branch of artificial intelligence (AI), specifically aimed at optimizing algorithms for prediction (regression), classification, clustering or anomaly detection. Growing capacity for data storage and computation lets data analysts train their algorithms on ever larger datasets, improving them further.

There are numerous business applications for these algorithms, but here’s a simple example: predicting whether or not a person can configure a home Wi-Fi network.

The data analyst will need two things to start:

A dataset: Let’s assume we download LinkedIn’s data

A few success cases to “train on”: We highlight 100 people in the dataset who are all great at configuring Wi-Fi networks.

We’ll then run the algorithm on our dataset, asking it to identify common attributes among the Wi-Fi masters and suggest similar profiles. So far, it’s all simple regression. Machine learning adds a feedback loop that tells the algorithm when its predictions are correct or incorrect, which allows the machine to update its algorithm dynamically.
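To make the feedback loop concrete, here is a minimal sketch using logistic regression trained by stochastic gradient descent, in plain Python. The features, the labelled examples (including negative ones, which a real setup would also need alongside the 100 Wi-Fi masters) and the learning rate are all hypothetical. The same `update` step is used for the initial training and for later feedback; only the source of the label differs.

```python
import math


class WifiModel:
    """Logistic regression over binary profile features, trained one example at a time."""

    def __init__(self, features, learning_rate=0.5):
        self.weights = {f: 0.0 for f in features}
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, profile):
        """Estimated probability that the profile belongs to a Wi-Fi master."""
        z = self.bias + sum(w * profile.get(f, 0) for f, w in self.weights.items())
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, profile, label):
        """One gradient step on log-loss; label is 1 (correct match) or 0 (wrong match)."""
        error = label - self.predict(profile)
        self.bias += self.learning_rate * error
        for f in self.weights:
            self.weights[f] += self.learning_rate * error * profile.get(f, 0)


# Hypothetical features and labelled examples (1 = Wi-Fi master, 0 = not).
model = WifiModel(["kw_wifi", "kw_router", "technical_degree", "likes_video_games"])
training = [
    ({"kw_wifi": 1, "kw_router": 1, "technical_degree": 1}, 1),
    ({"kw_router": 1, "likes_video_games": 1}, 1),
    ({"likes_video_games": 1}, 0),
    ({}, 0),
]
for _ in range(50):
    for profile, label in training:
        model.update(profile, label)

candidate = {"kw_wifi": 1, "technical_degree": 1}
before = model.predict(candidate)

# Feedback loop: the client reports that this suggested profile was not a match.
model.update(candidate, 0)
after = model.predict(candidate)
print(f"score before feedback: {before:.2f}, after: {after:.2f}")
```

A single negative label lowers the score for this kind of profile; many such signals over time are what let the model drift toward what clients actually want.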

Side note: great pieces on AI and ML:

– A bit more technical: Using ML to predict the quality of wines

– Most common ML algorithms: A tour of the top 10 algos for ML newbies

– A really cool example of generalization error, from the quants at Quantopian who backtested 888 trading strategies

The richer the data your algorithm can train on, the more accurate it will be when applied to new cases. If your training data is limited, you are exposed to the common “generalization error”, where the algorithm simply hasn’t learned which factors really correlate with success (in our case, Wi-Fi skills).
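Generalization error is easy to reproduce on toy data. The sketch below assumes a hypothetical world where mentioning "router" is what really predicts Wi-Fi skills, and a naive learner that simply picks the single attribute that best fits its training data. With only four training profiles, "video_games" fits perfectly by coincidence, so the learner picks it and then does poorly on profiles it has not seen.

```python
# Hypothetical toy data: two binary attributes per profile and a label
# (1 = good at configuring Wi-Fi). In this toy world "router" is the real signal.
ATTRIBUTES = ["video_games", "router"]

small_training = [
    ({"video_games": 1, "router": 0}, 1),
    ({"video_games": 1, "router": 1}, 1),
    ({"video_games": 0, "router": 0}, 0),
    ({"video_games": 0, "router": 0}, 0),
]
held_out = [
    ({"video_games": 0, "router": 1}, 1),
    ({"video_games": 0, "router": 1}, 1),
    ({"video_games": 1, "router": 0}, 0),
    ({"video_games": 1, "router": 0}, 0),
    ({"video_games": 1, "router": 1}, 1),
    ({"video_games": 0, "router": 0}, 0),
]


def accuracy(attribute, data):
    """Share of profiles where 'attribute present' matches the label."""
    return sum(profile[attribute] == label for profile, label in data) / len(data)


def fit(data):
    """Naive learner: pick the single attribute that best predicts the training labels."""
    return max(ATTRIBUTES, key=lambda a: accuracy(a, data))


chosen = fit(small_training)
print(chosen)                                # video_games
print(accuracy(chosen, small_training))      # 1.0
print(round(accuracy(chosen, held_out), 2))  # 0.33
```

The gap between 100% on the training data and 33% on unseen profiles is the generalization error; more (and more varied) training profiles would have exposed "video_games" as a coincidence.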

Artificial intelligence is the overarching concept of machines that can act in ways that match human intelligence. In that sense, the concept of AI is elusive: my cell phone can perform calculations that required human brains only a few decades ago, yet today no one would claim that it has artificial intelligence.

Most AI companies are thus in fact applying statistical methods with a feedback loop, i.e. different types of machine learning (ML), so I’ll use the latter term going forward. It’s not magic, but still pretty interesting.

Question 2: What is the source of advantage for machine-driven networks?

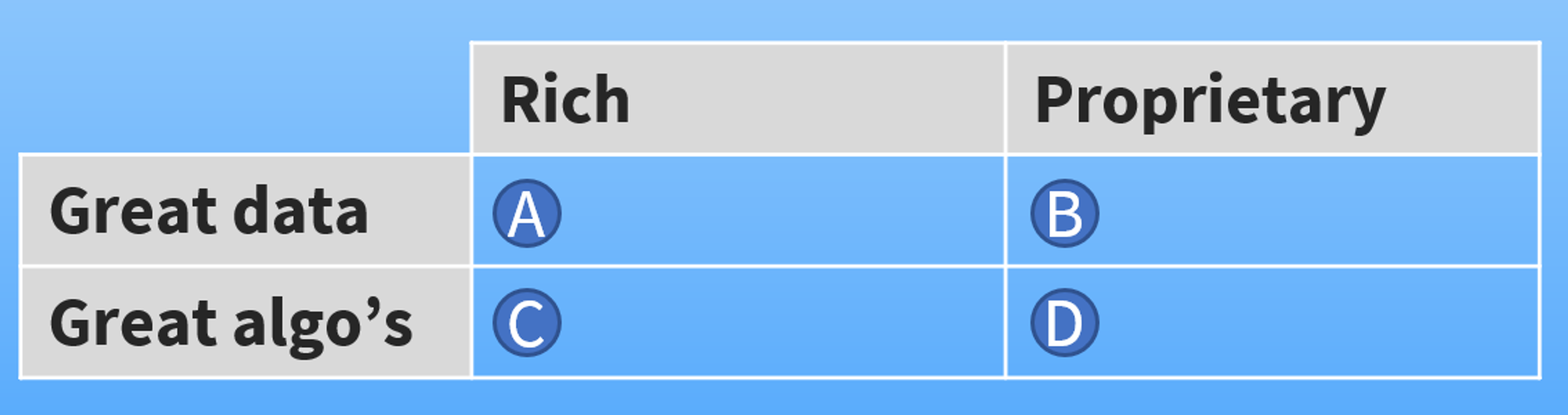

Since ML businesses are about applying regression models to data, there are two things that can make them successful:

Great data that is rich and proprietary

Great algorithms that are proprietary and trained on rich data

Great Data: rich and proprietary

Great datasets are rich in content. Think of an Excel spreadsheet with many rows, each with multiple attributes recorded in the columns. Whereas Rolodexes were limited, today’s professional career databases are very rich. Figure out how to copy them all and combine them, and you will have a great dataset – especially if you can resolve duplicates and overlaps.
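Resolving duplicates is where much of the work lies. A minimal Python sketch, assuming records are dicts and that two records describe the same person when their normalized name and company match; real entity resolution needs fuzzy matching and far more care. The records and the `merge_profiles` helper are made up for illustration.

```python
import unicodedata


def normalize(text):
    """Lower-case, strip accents and collapse whitespace so near-identical keys match."""
    decomposed = unicodedata.normalize("NFKD", text)
    without_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(without_accents.lower().split())


def merge_profiles(*sources):
    """Combine records from several sources; records with the same (name, company) key are merged."""
    merged = {}
    for source in sources:
        for record in source:
            key = (normalize(record["name"]), normalize(record.get("company", "")))
            combined = merged.setdefault(key, {})
            for field, value in record.items():
                combined.setdefault(field, value)  # the first source to supply a field wins
    return list(merged.values())


# Made-up records from two hypothetical sources.
source_a = [{"name": "Åsa Nyström", "company": "Acme AB", "title": "CTO"}]
source_b = [
    {"name": "asa  nystrom", "company": "ACME AB", "education": "MSc, Electrical Engineering"},
    {"name": "Sara Lind", "company": "Beta Oy", "title": "Buyer"},
]

for profile in merge_profiles(source_a, source_b):
    print(profile)
```

The two Åsa records collapse into one richer profile (title from one source, education from the other), which is exactly the "richer" dataset the paragraph describes.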

Here is a first problem: how do you obtain this data? Companies like LinkedIn make a living off selling access to tranches of their data*; they don’t want you to just take it all (see Terms or this court case). These machine-driven expert networks seem to have found a workaround. NewtonX and ProSapient didn’t reply when asked about the details, but the privacy policies of these networks (all retrieved 5 Feb 2019) make it clear that they do some crawling/scraping of multiple databases, giving them rich data:

“We may collect or obtain your Personal Data that you manifestly choose to make public, including via social media (e.g., we may collect information from your social media or business networking profile(s)).” – NewtonX Privacy Policy

“It will also crawl other public sources of information about you to further learn about your background […] sources such as Companies House and the Electoral Register based inside the EU.” – ProSapient Privacy Policy

“[…] through publicly available sources including: Professional social media and networking sites; Corporate websites; Academic journals; Clinical trial websites; Research laboratory websites; and Other online sources.” – Techspert Privacy Policy

That takes us to the second attribute of great data: whether it is proprietary or not. Scraping other companies’ databases does not lead to a proprietary dataset. Although combining multiple scraped datasets could produce a richer final dataset, your ownership of it is likely to be questioned. Also, GDPR will be a challenge.

Great Algorithms: proprietary and trained on rich data

If you manage to obtain a rich dataset, you’re well on your way to creating a great algorithm. Today there are many DIY tools where you plug in your data and target variable and get back the attributes with the highest correlation as output. Over time and multiple iterations, you should establish certain formulae that are generally applicable. For example, your algorithm might say that experts knowledgeable about Wi-Fi tend to have the following attributes:

Age: <45 years

Education: Technical diploma

Interests: Video games

Keywords: “Wi-Fi”, “Router”
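Under the hood, the simplest version of such a DIY tool ranks attributes by their correlation with the target variable. A minimal sketch in plain Python, assuming hypothetical one-hot encoded profiles and `wifi_expert` as the target; with this few rows, the ranking is exactly the kind of result that is prone to generalization error.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences (0.0 if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y) if spread_x and spread_y else 0.0


def rank_attributes(rows, target):
    """(attribute, correlation with target) pairs, strongest absolute correlation first."""
    ys = [row[target] for row in rows]
    attributes = [name for name in rows[0] if name != target]
    scores = {a: pearson([row[a] for row in rows], ys) for a in attributes}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical one-hot encoded profiles; "wifi_expert" is the target variable.
rows = [
    {"age_under_45": 1, "technical_diploma": 1, "kw_router": 1, "likes_golf": 0, "wifi_expert": 1},
    {"age_under_45": 1, "technical_diploma": 0, "kw_router": 1, "likes_golf": 1, "wifi_expert": 1},
    {"age_under_45": 0, "technical_diploma": 1, "kw_router": 0, "likes_golf": 1, "wifi_expert": 0},
    {"age_under_45": 1, "technical_diploma": 0, "kw_router": 0, "likes_golf": 0, "wifi_expert": 0},
    {"age_under_45": 0, "technical_diploma": 0, "kw_router": 0, "likes_golf": 1, "wifi_expert": 0},
]

for attribute, r in rank_attributes(rows, "wifi_expert"):
    print(f"{attribute:<18} {r:+.2f}")
```

Correlation only says which attributes co-occur with success in the data you have; it is the client-confirmed success cases that make such a ranking trustworthy.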

This can be cool, especially if your data is very rich and contains many success cases, where your client confirms that “indeed, this person is great at Wi-Fi configuration”. Such success cases are also most likely proprietary, making your algorithms more unique and proprietary.

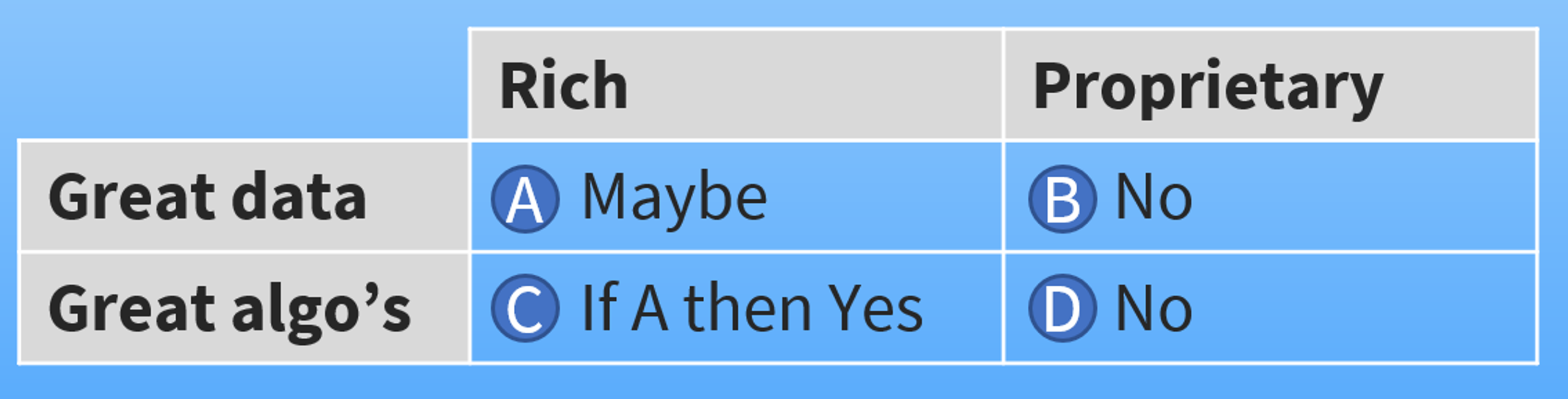

I’ll summarize the above in a matrix:

If I were to start a new machine-driven expert network today, here is what I would get:

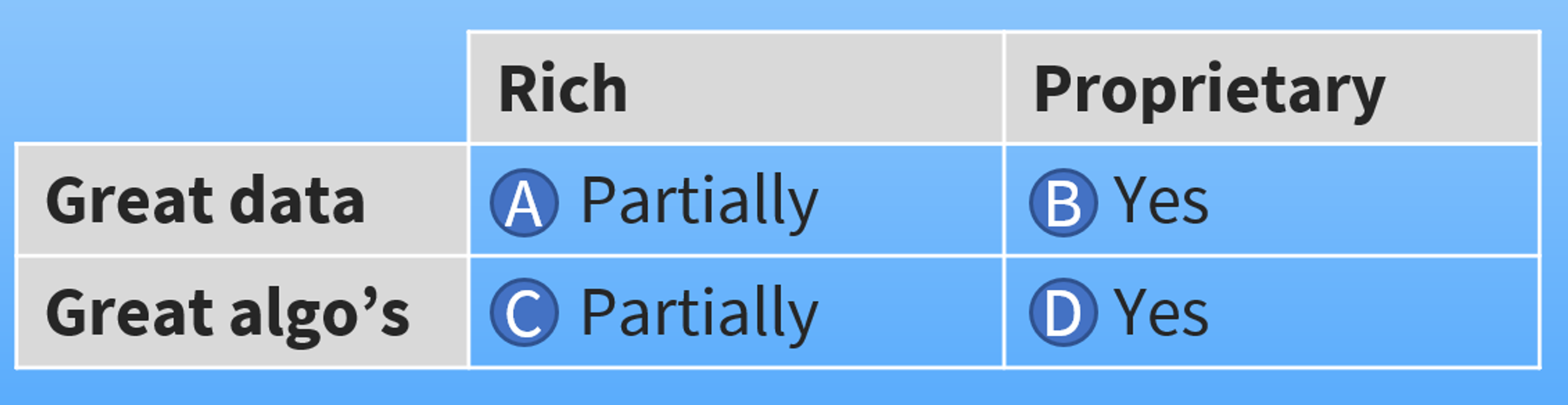

If I were to instead use the database of one of the larger expert networks as training data, I would get:

Now this is interesting, and leads to an obvious recommendation to Atheneum, Third Bridge, GLG, Guidepoint, AlphaSights, Capvision and Coleman: Hire a couple of data scientists to work on the data you have lawfully collected. This is your database of experts matched to client projects, along with clients’ feedback on the calls. Some networks claim to have databases of 500,000 experts. That is only 0.1% of the total profiles on LinkedIn, but it is a good start – it’s proprietary (experts have given consent), and client feedback gives you valuable “success cases”.

Qualifier.ai registered their company only 12 months before selling their solution to me, so it cannot be too hard with the right people on board.

Now back to our question: What is the source of advantage for machine-driven networks? The answer is: none, unless you can lawfully obtain great data, in which case you still need “success” cases to train your algorithms on. This also answers the final question: Are machine-driven networks better?

Business is great for Robin at Qualifier.ai, since his algorithm is pretty simple and effective. His business works even with broad attributes – meaning he would have found me and other relevant leads with something like this:

Title: “CEO” or “Sales Manager”

Company: “Any”

Country: “Sweden”
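Robin’s targeting rule fits in a few lines. A sketch in Python with hypothetical lead records; "Company: Any" simply means the company field is never checked.

```python
# Hypothetical lead records; only the three broad criteria from the text are applied.
leads = [
    {"name": "Lead 1", "title": "CEO", "company": "Example AB", "country": "Sweden"},
    {"name": "Lead 2", "title": "Sales Manager", "company": "Sample AB", "country": "Sweden"},
    {"name": "Lead 3", "title": "CEO", "company": "Muster GmbH", "country": "Germany"},
    {"name": "Lead 4", "title": "Engineer", "company": "Demo AB", "country": "Sweden"},
]

TARGET_TITLES = {"ceo", "sales manager"}
TARGET_COUNTRY = "sweden"


def is_target(lead):
    """Title and country must match; company is "Any", so it is never checked."""
    return (
        lead["title"].strip().lower() in TARGET_TITLES
        and lead["country"].strip().lower() == TARGET_COUNTRY
    )


print([lead["name"] for lead in leads if is_target(lead)])
# ['Lead 1', 'Lead 2']
```

Because the criteria are structured fields with exact values, there is little for a model to get wrong, which is why this kind of targeting works so well.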

These attributes are tangible and easy to optimize for. Also, LinkedIn makes this data available on Google for most profiles, so Robin isn’t breaking any user agreement. Now try finding an expert who can assess the industrial vacuum cleaner market in India, as discussed here. That search is far more differentiated and unstructured, and much harder to train an algorithm on.

Search engines v. feedback loops

When I showed this article to our CTO Mehdi, he pointed out the difference between a good search engine and ML with feedback loops.

– A good search engine is capable of natural language processing (meaning it adjusts for the fact that some people say “hoover” instead of “vacuum cleaner”). This is available plug-and-play from providers such as Algolia (https://www.algolia.com/).

– A good feedback loop requires frequent repetition of similar queries. When Google guesses which display ads might be relevant to you, it does so because it has an amazing feedback loop: millions of people actually clicking the ad or not. That is the success data the machine is “learning” from.
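Mehdi’s first point, about synonyms, can be illustrated with a crude stand-in for what an NLP-capable search engine does: expanding a query with synonyms before matching. This is a hypothetical sketch with a hand-written `SYNONYMS` table and plain substring matching, not Algolia’s API or how it works internally.

```python
# Hypothetical synonym table; real NLP search handles far more (stemming, typos, context).
SYNONYMS = [
    {"vacuum cleaner", "vacuum", "hoover"},
]


def expand(query):
    """Return the query together with every synonym listed alongside it."""
    q = query.strip().lower()
    for group in SYNONYMS:
        if q in group:
            return set(group)
    return {q}


def search(profiles, query):
    """Profiles whose text contains the query or any of its synonyms (substring match)."""
    terms = expand(query)
    return [p for p in profiles if any(term in p.lower() for term in terms)]


profiles = [
    "Head of sales, industrial hoovers, Mumbai",
    "Product manager, vacuum cleaner motors",
    "Wi-Fi network engineer",
]
print(search(profiles, "vacuum cleaner"))
print(search(profiles, "Hoover"))
# Both print: ['Head of sales, industrial hoovers, Mumbai', 'Product manager, vacuum cleaner motors']
```

Substring matching is deliberately crude: "vacuum" would also match "vacuum pump", which is the kind of ambiguity proper language processing has to resolve.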

For a machine-driven expert network, the “learning” part would be either:

– whether the analyst got interested in a profile or not

– whether the client got interested in the profile afterwards (a bit harder to collect)

Both events are comparatively infrequent, and each client project is somewhat unique (it’s subject matter experts, after all). This makes it difficult to collect helpful success cases to learn from, especially if you go after every geography and industry niche at the same time.
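The point about infrequent feedback can be quantified with the standard error of an observed success rate, sqrt(p(1 − p)/n), for n independent events. The sketch holds a hypothetical 30% success rate fixed and varies only n: a million ad impressions versus twenty suggestions of the same niche expert profile. Both volumes are made-up illustrations.

```python
from math import sqrt


def standard_error(rate, n):
    """Standard error of a success rate observed over n independent events."""
    return sqrt(rate * (1 - rate) / n)


RATE = 0.3  # hypothetical success rate, held fixed so only n varies
for label, n in [("ad impressions", 1_000_000), ("niche expert suggestions", 20)]:
    print(f"{label}: n = {n:,}, standard error = {standard_error(RATE, n):.4f}")
```

With twenty events the estimate is only known to within roughly ±0.10 (one standard error), against about ±0.0005 with a million, so the machine has far less to "learn" from in the expert case.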

So, it’s all good for Robin – but is it Game Over for machine-driven networks?

Not at all; there is ample space for specialization. In recent years, the expert network industry has seen a boom, with high growth for both incumbents and new entrants (see article). A year ago, we first raised the question of fragmentation versus consolidation. Since then, we’ve found specialized networks such as Candour in the energy sector, visasQ for Japan, Bioexpert Network for biotech, and – curiously – CognitionX for AI topics.

Inex One believes that this development is good. Specialization will lead to more efficient and faster identification of relevant experts, reducing transaction costs caused by inefficient operations. Specialized networks can thrive by developing rich, proprietary databases and algorithms in their respective niches, where they are unbeatable. Inex One facilitates the match-making between these specialized networks and the organizations who seek their services.

Click here to try Inex One for your organization.

PS. Do look out for my automated emails over the coming weeks. If you receive one and can tell it apart from a “human email”, you’ll get 3 months of free Inex One usage.

Footnotes:

* That is what LinkedIn does when charging $800/year for Sales Navigator, which is popular among headhunters and expert networks.

Robin is a fictitious name.